Deploying Harbor + Portainer on CentOS

[TOC]

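Install Docker and docker-compose

- This test uses two servers. One runs Harbor + Portainer + the nginx load balancer. The other runs two nginx instances, each serving different content.

- Download the docker and docker-compose installation packages. Installing from the static binary packages is recommended, as done below.

- Install Docker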

[root@nginx1 tools]# tar xf docker-24.0.4.tgz

[root@nginx1 tools]# cd docker

[root@nginx1 docker]# ls

containerd containerd-shim ctr docker dockerd docker-init docker-proxy runc

[root@nginx1 docker]# cp ./* /usr/bin/

[root@nginx1 docker]# cp ./* /usr/sbin/

[root@nginx1 docker]# cp ./* /usr/local/bin/

[root@nginx1 docker]# cp ./* /usr/local/sbin/
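Copying the binaries into four directories is redundant. The unit file below starts `/usr/bin/dockerd`, and `/usr/bin` is already on PATH, so that is where dockerd looks for containerd, runc and the other helpers. Below is a minimal sketch that installs them into a single directory with the executable bit set. The function name `install_docker_bins` and its two arguments are hypothetical and for illustration only.

```shell
#!/bin/sh
# Hypothetical helper: copy every regular file from the extracted docker/ directory
# into one target directory (normally /usr/bin) with mode 0755.
install_docker_bins() {
    src_dir=$1    # e.g. the extracted ./docker directory
    dest_dir=$2   # e.g. /usr/bin
    mkdir -p "$dest_dir" || return 1
    for f in "$src_dir"/*; do
        [ -f "$f" ] || continue              # skip anything that is not a regular file
        install -m 0755 "$f" "$dest_dir/" || return 1
    done
}
```

Usage, from the directory containing the extracted tarball: `install_docker_bins ./docker /usr/bin`.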

[root@nginx1 docker]# vim /etc/systemd/system/docker.service # write the following content into this file

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target
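The unit can also be written from a script instead of being pasted in vim. The sketch below writes the same unit with a quoted heredoc, so `$MAINPID` stays literal for systemd to expand. It uses the three-slash socket URL `unix:///var/run/docker.sock`. `write_docker_unit` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: write the docker.service unit to the given path.
# The quoted delimiter ('EOF') prevents the shell from expanding $MAINPID.
write_docker_unit() {
    unit_path=$1   # normally /etc/systemd/system/docker.service
    cat > "$unit_path" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage: `write_docker_unit /etc/systemd/system/docker.service && systemctl daemon-reload`, then start Docker as below.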

[root@nginx1 docker]# service docker start # start Docker

[root@nginx1 docker]# systemctl enable docker.service # start Docker automatically at boot

[root@nginx1 docker]# ps -ef |grep docker

root 1390 1 0 20:28 ? 00:00:11 /usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix://var/run/docker.sock

root 1818 1390 0 20:28 ? 00:00:09 containerd --config /var/run/docker/containerd/containerd.toml --log-level info

root 14197 14060 0 23:03 pts/3 00:00:00 grep --color=auto docker

- Install docker-compose

[root@nginx1 docker]# chmod +x docker-compose

[root@nginx1 docker]# cp docker-compose /usr/bin/

[root@nginx1 docker]# cp docker-compose /usr/sbin/

[root@nginx1 docker]# cp docker-compose /usr/local/bin/

[root@nginx1 docker]# cp docker-compose /usr/local/sbin/

Download and run the Harbor images

- Download the installer

GitHub download URL: https://github.com/goharbor/harbor/releases/download/v2.3.4/harbor-offline-installer-v2.3.4.tgz

Mirror download URL (this mirror hosts v2.6.1, while the steps below use v2.3.4): https://install.jishuliu.cn/docker/harbor-offline-installer-v2.6.1.tgz

- Install Harbor

[root@nginx1 tools]# tar xf harbor-offline-installer-v2.3.4.tgz

[root@nginx1 tools]# ls

docker-24.0.4.tgz harbor harbor-offline-installer-v2.3.4.tgz lvs.tar.gz

[root@nginx1 tools]# cd harbor

[root@nginx1 harbor]# cp harbor.yml.tmpl harbor.yml

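# Edit harbor.yml and change the following:

hostname: change this to your own hostname (or the server's IP address, as used later in this article)

harbor_admin_password: the password for logging in to Harbor

Comment out the following lines (this guide runs Harbor over plain HTTP):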

#https:

# https port for harbor, default is 443

# port: 443

# # The path of cert and key files for nginx

# certificate: /your/certificate/path

# private_key: /your/private/key/path
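The harbor.yml edits above can be scripted with sed. This sketch assumes the layout of `harbor.yml.tmpl` from the v2.x offline installer:

- `hostname:` and `harbor_admin_password:` appear at the start of a line.
- The `https:` block ends at its `private_key:` line.

`configure_harbor_yml` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: apply the harbor.yml edits with sed.
#   $1 = path to harbor.yml, $2 = hostname or IP, $3 = admin password
# Comments out every line from "https:" through the "private_key:" line.
configure_harbor_yml() {
    yml=$1 host=$2 pass=$3
    sed -e "s|^hostname:.*|hostname: $host|" \
        -e "s|^harbor_admin_password:.*|harbor_admin_password: $pass|" \
        -e '/^https:/,/private_key:/ s/^/#/' \
        "$yml" > "$yml.tmp" && mv "$yml.tmp" "$yml"
}
```

Usage: `configure_harbor_yml harbor.yml 192.168.1.21 'YourPassword'`. A password containing `|`, `&` or `\` would need escaping before it is passed to sed.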

[root@nginx1 harbor]# ./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 19.03.9

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 1.23.2

[Step 2]: loading Harbor images ...

103405848fd2: Loading layer [==================================================>] 37.24MB/37.24MB

bab588f83459: Loading layer [==================================================>] 8.75MB/8.75MB

5f0fc8804b08: Loading layer [==================================================>] 11.64MB/11.64MB

c28f2daf5359: Loading layer [==================================================>] 1.688MB/1.688MB

Loaded image: goharbor/harbor-portal:v2.3.4

e1768f3b0fc8: Loading layer [==================================================>] 8.75MB/8.75MB

Loaded image: goharbor/nginx-photon:v2.3.4

80c494db90e8: Loading layer [==================================================>] 6.82MB/6.82MB

f7c774b190f5: Loading layer [==================================================>] 6.219MB/6.219MB

3f9238d93e31: Loading layer [==================================================>] 15.88MB/15.88MB

357454838775: Loading layer [==================================================>] 29.29MB/29.29MB

bbdcee8bdadb: Loading layer [==================================================>] 22.02kB/22.02kB

ac89f4dce657: Loading layer [==================================================>] 15.88MB/15.88MB

Loaded image: goharbor/notary-server-photon:v2.3.4

305b24ea374e: Loading layer [==================================================>] 7.363MB/7.363MB

55dcab863ae7: Loading layer [==================================================>] 4.096kB/4.096kB

4832b43b9049: Loading layer [==================================================>] 3.072kB/3.072kB

c5065fd5a724: Loading layer [==================================================>] 31.52MB/31.52MB

c1732b627bc6: Loading layer [==================================================>] 11.39MB/11.39MB

84e3371d6d71: Loading layer [==================================================>] 43.7MB/43.7MB

Loaded image: goharbor/trivy-adapter-photon:v2.3.4

0bda5dd4507f: Loading layer [==================================================>] 9.918MB/9.918MB

9b8b0a75131b: Loading layer [==================================================>] 3.584kB/3.584kB

8a27a2e44308: Loading layer [==================================================>] 2.56kB/2.56kB

c2d79ade2e58: Loading layer [==================================================>] 73.36MB/73.36MB

a7efb206c673: Loading layer [==================================================>] 5.632kB/5.632kB

a0151a9b651b: Loading layer [==================================================>] 94.72kB/94.72kB

077455b7a6e9: Loading layer [==================================================>] 11.78kB/11.78kB

77235bb05a07: Loading layer [==================================================>] 74.26MB/74.26MB

d55c045b5185: Loading layer [==================================================>] 2.56kB/2.56kB

Loaded image: goharbor/harbor-core:v2.3.4

1dcd2f87d99c: Loading layer [==================================================>] 1.096MB/1.096MB

5991768c62a8: Loading layer [==================================================>] 5.888MB/5.888MB

4cbb6847a67d: Loading layer [==================================================>] 173.7MB/173.7MB

437252ecb71f: Loading layer [==================================================>] 15.73MB/15.73MB

324517377bf0: Loading layer [==================================================>] 4.096kB/4.096kB

4697090444de: Loading layer [==================================================>] 6.144kB/6.144kB

19cb7f4e8295: Loading layer [==================================================>] 3.072kB/3.072kB

eb573ba4e927: Loading layer [==================================================>] 2.048kB/2.048kB

a30648a5fa3e: Loading layer [==================================================>] 2.56kB/2.56kB

65ab99d7c381: Loading layer [==================================================>] 2.56kB/2.56kB

9395849bf38f: Loading layer [==================================================>] 2.56kB/2.56kB

23a2711d2570: Loading layer [==================================================>] 8.704kB/8.704kB

Loaded image: goharbor/harbor-db:v2.3.4

2fcbfe43743b: Loading layer [==================================================>] 9.918MB/9.918MB

71f1cf1a21e7: Loading layer [==================================================>] 3.584kB/3.584kB

a5fd6aea12f3: Loading layer [==================================================>] 2.56kB/2.56kB

5d286dafbc99: Loading layer [==================================================>] 82.47MB/82.47MB

421d40a4b24e: Loading layer [==================================================>] 83.27MB/83.27MB

Loaded image: goharbor/harbor-jobservice:v2.3.4

a95079faecd9: Loading layer [==================================================>] 6.825MB/6.825MB

baf6f07b0d35: Loading layer [==================================================>] 4.096kB/4.096kB

5021c842bb8d: Loading layer [==================================================>] 3.072kB/3.072kB

964d95b989da: Loading layer [==================================================>] 19.02MB/19.02MB

f75de434d758: Loading layer [==================================================>] 19.81MB/19.81MB

Loaded image: goharbor/registry-photon:v2.3.4

6451d64104a0: Loading layer [==================================================>] 165.1MB/165.1MB

00794002f84b: Loading layer [==================================================>] 57.03MB/57.03MB

760f43153bcd: Loading layer [==================================================>] 2.56kB/2.56kB

4920fd15af6e: Loading layer [==================================================>] 1.536kB/1.536kB

d337336c9729: Loading layer [==================================================>] 12.29kB/12.29kB

5f17a57a5e01: Loading layer [==================================================>] 2.884MB/2.884MB

05ecc6b370ea: Loading layer [==================================================>] 297kB/297kB

Loaded image: goharbor/prepare:v2.3.4

b370aa55f6bb: Loading layer [==================================================>] 6.825MB/6.825MB

c40e1854804c: Loading layer [==================================================>] 4.096kB/4.096kB

62c713c68f94: Loading layer [==================================================>] 19.02MB/19.02MB

067cb9d13dc2: Loading layer [==================================================>] 3.072kB/3.072kB

da711fd41a09: Loading layer [==================================================>] 25.43MB/25.43MB

61af5bc5684d: Loading layer [==================================================>] 45.24MB/45.24MB

Loaded image: goharbor/harbor-registryctl:v2.3.4

1fce02e2f0b2: Loading layer [==================================================>] 9.918MB/9.918MB

90182ef8d6af: Loading layer [==================================================>] 17.71MB/17.71MB

30cf2783eb4e: Loading layer [==================================================>] 4.608kB/4.608kB

c8fa87f0c432: Loading layer [==================================================>] 18.5MB/18.5MB

Loaded image: goharbor/harbor-exporter:v2.3.4

1bbdf18315cc: Loading layer [==================================================>] 6.82MB/6.82MB

332423af2705: Loading layer [==================================================>] 6.219MB/6.219MB

a2024685b4fa: Loading layer [==================================================>] 14.47MB/14.47MB

a04184f058e2: Loading layer [==================================================>] 29.29MB/29.29MB

8fec5d89081c: Loading layer [==================================================>] 22.02kB/22.02kB

da3e11c34f87: Loading layer [==================================================>] 14.47MB/14.47MB

Loaded image: goharbor/notary-signer-photon:v2.3.4

01d27e9ffb2b: Loading layer [==================================================>] 125.5MB/125.5MB

dc823a6e78ed: Loading layer [==================================================>] 3.584kB/3.584kB

65b4a979ece5: Loading layer [==================================================>] 3.072kB/3.072kB

bd00d96da856: Loading layer [==================================================>] 2.56kB/2.56kB

5270920b2bb1: Loading layer [==================================================>] 3.072kB/3.072kB

9c736a3f305b: Loading layer [==================================================>] 3.584kB/3.584kB

e3053dfef34c: Loading layer [==================================================>] 19.97kB/19.97kB

Loaded image: goharbor/harbor-log:v2.3.4

56dfaad4f3ae: Loading layer [==================================================>] 121.4MB/121.4MB

1e54038c4760: Loading layer [==================================================>] 3.072kB/3.072kB

3283554b0538: Loading layer [==================================================>] 59.9kB/59.9kB

607e2816db21: Loading layer [==================================================>] 61.95kB/61.95kB

Loaded image: goharbor/redis-photon:v2.3.4

a18bcd8df6d3: Loading layer [==================================================>] 6.824MB/6.824MB

0dcfc4641990: Loading layer [==================================================>] 67.47MB/67.47MB

88be11c9e4c9: Loading layer [==================================================>] 3.072kB/3.072kB

3a99925cf064: Loading layer [==================================================>] 4.096kB/4.096kB

a426fee0fb8a: Loading layer [==================================================>] 68.26MB/68.26MB

Loaded image: goharbor/chartmuseum-photon:v2.3.4

[Step 3]: preparing environment ...

[Step 4]: preparing harbor configs ...

prepare base dir is set to /root/tools/harbor

WARNING:root:WARNING: HTTP protocol is insecure. Harbor will deprecate http protocol in the future. Please make sure to upgrade to https

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

Generated and saved secret to file: /data/secret/keys/secretkey

Successfully called func: create_root_cert

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

[Step 5]: starting Harbor ...

Creating network "harbor_harbor" with the default driver

Creating harbor-log ... done

Creating harbor-portal ... done

Creating harbor-db ... done

Creating redis ... done

Creating registryctl ... done

Creating registry ... done

Creating harbor-core ... done

Creating harbor-jobservice ... done

Creating nginx ... done

✔ ----Harbor has been installed and started successfully.----

## The message "started successfully." means Harbor is up

[root@nginx1 harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cc41f1f38620 goharbor/nginx-photon:v2.3.4 "nginx -g 'daemon of…" 3 minutes ago Up 3 minutes (healthy) 0.0.0.0:80->8080/tcp nginx

7ad1e196cf76 goharbor/harbor-jobservice:v2.3.4 "/harbor/entrypoint.…" 3 minutes ago Up 3 minutes (healthy) harbor-jobservice

01db8e3248c0 goharbor/harbor-core:v2.3.4 "/harbor/entrypoint.…" 3 minutes ago Up 3 minutes (healthy) harbor-core

de4b1bbca967 goharbor/harbor-portal:v2.3.4 "nginx -g 'daemon of…" 3 minutes ago Up 3 minutes (healthy) harbor-portal

f0625517a67a goharbor/registry-photon:v2.3.4 "/home/harbor/entryp…" 3 minutes ago Up 3 minutes (healthy) registry

15a66395d15d goharbor/harbor-registryctl:v2.3.4 "/home/harbor/start.…" 3 minutes ago Up 3 minutes (healthy) registryctl

eeb4144dff49 goharbor/harbor-db:v2.3.4 "/docker-entrypoint.…" 3 minutes ago Up 3 minutes (healthy) harbor-db

d7610f7aafba goharbor/redis-photon:v2.3.4 "redis-server /etc/r…" 3 minutes ago Up 3 minutes (healthy) redis

5149467660bf goharbor/harbor-log:v2.3.4 "/bin/sh -c /usr/loc…" 3 minutes ago Up 3 minutes (healthy) 127.0.0.1:1514->10514/tcp harbor-log

e2119600a7c8 portainer/portainer "/portainer" 24 minutes ago Up 21 minutes 0.0.0.0:8080->9000/tcp prtainer

[root@nginx1 harbor]#

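# Open the host IP in a browser to log in. The default username is admin, and the password is the harbor_admin_password set in harbor.yml (Harbor12345 if unchanged).

- Log in to Harbor from all servers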

[root@harbor docker]# cd /etc/docker

[root@harbor docker]# vim daemon.json

{

"registry-mirrors": ["http://192.168.1.21"]

}

[root@harbor docker]# vim /etc/systemd/system/docker.service # edit the ExecStart line: append --insecure-registry 192.168.1.21 after dockerd, where 192.168.1.21 is the IP of the Harbor server

[root@harbor docker]# systemctl daemon-reload

[root@harbor docker]# service docker restart
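Instead of editing ExecStart, the registry can be declared in `/etc/docker/daemon.json` through the `insecure-registries` key. Use one mechanism or the other, not both: dockerd refuses to start when the same option is set both as a flag and in daemon.json. The sketch below writes such a file. The helper name is hypothetical, and 192.168.1.21 is the Harbor host used in this article.

```shell
#!/bin/sh
# Hypothetical helper: write a daemon.json that marks one plain-HTTP registry as insecure.
#   $1 = output path (normally /etc/docker/daemon.json), $2 = registry host[:port]
# Note: this overwrites the file, so merge any existing keys (e.g. registry-mirrors) by hand.
write_daemon_json() {
    out=$1 registry=$2
    cat > "$out" <<EOF
{
  "insecure-registries": ["$registry"]
}
EOF
}
```

Usage: `write_daemon_json /etc/docker/daemon.json 192.168.1.21 && systemctl restart docker`.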

[root@harbor harbor]# docker login 192.168.1.21

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

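# Login succeeded; the warning above can be ignored

Pull the nginx image locally and push it to Harbor

- This article uses Portainer to deploy the nginx load balancer, so the nginx image has to be pulled first. Pushing to 192.168.1.21/nginx/nginx requires a project named nginx to already exist in Harbor.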

[root@nginx ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

eff15d958d66: Pull complete

1e5351450a59: Pull complete

2df63e6ce2be: Pull complete

9171c7ae368c: Pull complete

020f975acd28: Pull complete

266f639b35ad: Pull complete

Digest: sha256:097c3a0913d7e3a5b01b6c685a60c03632fc7a2b50bc8e35bcaa3691d788226e

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@nginx ~]# docker tag nginx:latest 192.168.1.21/nginx/nginx:latest

[root@nginx ~]# docker push 192.168.1.21/nginx/nginx:latest

The push refers to repository [192.168.1.21/nginx/nginx]

8525cde30b22: Pushed

1e8ad06c81b6: Pushed

49eeddd2150f: Pushed

ff4c72779430: Pushed

37380c5830fe: Pushed

e1bbcf243d0e: Pushed

latest: digest: sha256:2f14a471f2c2819a3faf88b72f56a0372ff5af4cb42ec45aab00c03ca5c9989f size: 1570

[root@nginx ~]#
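The tag-and-push step works the same way for any image. Below is a hypothetical helper. It assumes the local image reference has no registry host or port prefix (for example `nginx:latest`) and that the Harbor project already exists. Set `DRY_RUN=1` to print the docker commands instead of running them.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: re-tag a local image for Harbor and push it.
#   $1 = local image (e.g. nginx:latest), $2 = registry (e.g. 192.168.1.21),
#   $3 = existing Harbor project (e.g. nginx)
push_to_harbor() {
    src=$1 registry=$2 project=$3
    name=${src%%:*}                 # image name without the tag
    tag=${src#"$name"}              # ":tag", or empty when no tag was given
    target="$registry/$project/${name##*/}${tag:-:latest}"
    run docker tag "$src" "$target" && run docker push "$target"
}
```

Usage: `push_to_harbor nginx:latest 192.168.1.21 nginx` reproduces the two commands above.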

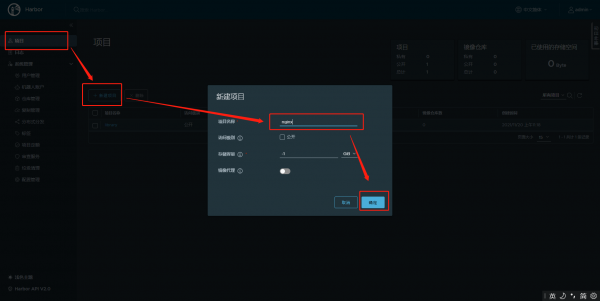

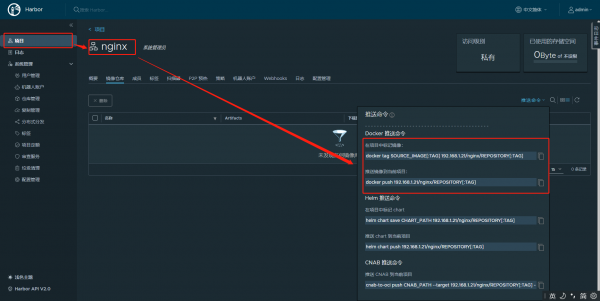

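After the push completes, the image can be viewed in the browser.

Download and run the Portainer orchestration center

- Pull the image online and start it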

[root@harbor ~]# docker pull portainer/portainer

Using default tag: latest

latest: Pulling from portainer/portainer

94cfa856b2b1: Pull complete

49d59ee0881a: Pull complete

a2300fd28637: Pull complete

Digest: sha256:fb45b43738646048a0a0cc74fcee2865b69efde857e710126084ee5de9be0f3f

Status: Downloaded newer image for portainer/portainer:latest

docker.io/portainer/portainer:latest

[root@harbor ~]# docker images |grep portainer

portainer/portainer latest 580c0e4e98b0 8 months ago 79.1MB

[root@harbor ~]# docker run -d -p 9000:9000 --name portainer --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer

d8dfe2dc57d41a9b12da62cec75cc52fac1e447767cbdfeddacba292b04f193c

[root@harbor ~]# docker ps |grep portainer

d8dfe2dc57d4 portainer/portainer "/portainer" 4 minutes ago Up 4 minutes 0.0.0.0:9000->9000/tcp portainer

- Offline deployment

Download the image archive: http://kodbox.jishuliu.cn/?explorer/share/fileDownload&shareID=75qriMgg&path=%7BshareItemLink%3A75qriMgg%7D%2Fportainer%2Fportainer.tar&s=IAUvt

[root@harbor ~]# docker load -i portainer.tar

8dfce63a7397: Loading layer [==================================================>] 217.6kB/217.6kB

11bdf2a940a7: Loading layer [==================================================>] 1.536kB/1.536kB

658693958bcb: Loading layer [==================================================>] 78.9MB/78.9MB

Loaded image: portainer/portainer:latest

[root@harbor ~]# docker run -d -p 9000:9000 --name portainer --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer

d8dfe2dc57d41a9b12da62cec75cc52fac1e447767cbdfeddacba292b04f193c

[root@harbor ~]# docker ps |grep portainer

d8dfe2dc57d4 portainer/portainer "/portainer" 4 minutes ago Up 4 minutes 0.0.0.0:9000->9000/tcp portainer

- Create a swarm cluster

[root@harbor ~]# docker swarm init

Swarm initialized: current node (kxv8tq250erfho1y9e4dgzggl) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3rxl007mynbd0kfs5rr1uma074y49rvgekla1domdadit1rxvc-1axs8s5iwcwz5ee8etzysuo9l 192.168.1.21:2377 # run this command on the other Docker servers to add them to this cluster

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

- Join the other servers to the swarm cluster

[root@nginx ~]# docker swarm join --token SWMTKN-1-3rxl007mynbd0kfs5rr1uma074y49rvgekla1domdadit1rxvc-1axs8s5iwcwz5ee8etzysuo9l 192.168.1.21:2377

This node joined a swarm as a worker.

[root@nginx ~]#

After they join, run the following command on the server that created the cluster to list the nodes in it:

[root@harbor ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

kxv8tq250erfho1y9e4dgzggl * harbor Ready Active Leader 19.03.9

9605za9g2kzda5vij8xkojgrs nginx Ready Active 19.03.9

Create containers with Portainer in the browser

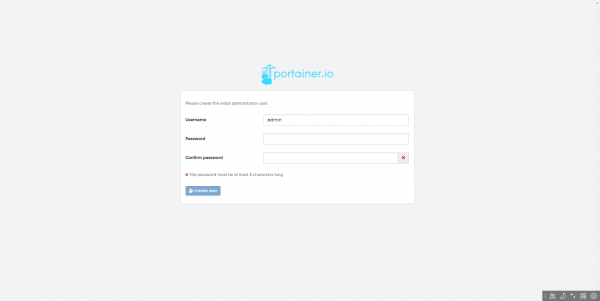

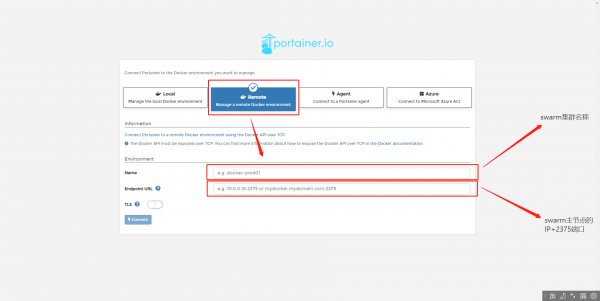

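Configure Portainer and add an image registry

- Open IP:9000 in a browser

- Set the account and password

- Configure the swarm cluster

- Configure an image registry

Create the nginx directories locally on the servers

- On the second server, create the local log paths, the static pages and the nginx configuration files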

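# Create the directories first, then the two sets of log files

[root@nginx ~]# mkdir -p /data/nginx/logs/logs{1,2} /data/nginx/index/index{1,2} /data/nginx/conf/conf{1,2}

[root@nginx ~]# touch /data/nginx/logs/logs{1,2}/access.log

[root@nginx ~]# touch /data/nginx/logs/logs{1,2}/error.log

# Create an index.html file in each page directory

[root@nginx ~]# touch /data/nginx/index/index{1,2}/index.html

# Write into the two index.html files: nginx1 for the first instance, nginx2 for the second

[root@nginx ~]# echo nginx1 > /data/nginx/index/index1/index.html

[root@nginx ~]# echo nginx2 > /data/nginx/index/index2/index.html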

[root@nginx ~]# cd /data/nginx/index/

[root@nginx index]# cat index2/index.html

nginx2

[root@nginx index]# cat index1/index.html

nginx1
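The directory layout and test pages above can also be created in one step. The sketch below takes the base directory as a parameter (normally /data/nginx). `make_nginx_tree` is a hypothetical name, and the two nginx.conf files still have to be written separately.

```shell
#!/bin/sh
# Hypothetical helper: create the logs/index/conf layout for nginx1 and nginx2
# under a base directory and write the two test pages.
make_nginx_tree() {
    base=$1
    for i in 1 2; do
        mkdir -p "$base/logs/logs$i" "$base/index/index$i" "$base/conf/conf$i" || return 1
        touch "$base/logs/logs$i/access.log" "$base/logs/logs$i/error.log"   # keeps existing logs intact
        echo "nginx$i" > "$base/index/index$i/index.html"
    done
}
```

Usage: `make_nginx_tree /data/nginx && chmod -R 750 /data/nginx/`.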

[root@nginx index]# cd ../conf

[root@nginx conf]# cat conf1/nginx.conf

user root;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 65;

server {

listen 80; # port 80 is used here

server_name localhost;

location / {

root /usr/local/web/; # static page directory

}

}

}

[root@nginx conf]# cat conf2/nginx.conf

user root;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 65;

server {

listen 81; # port 81 is used here

server_name localhost;

location / {

root /usr/local/web/; # static page directory

}

}

}

[root@nginx conf]# chmod -R 750 /data/nginx/

- On the Harbor/Portainer server, create the load balancer's configuration file and log path

[root@harbor ~]# mkdir -p /data/nginx/conf/

[root@harbor ~]# mkdir -p /data/nginx/logs

[root@harbor ~]# touch /data/nginx/logs/error.log

[root@harbor ~]# touch /data/nginx/logs/access.log

[root@harbor ~]# vim /data/nginx/conf/nginx.conf

[root@harbor ~]# cat /data/nginx/conf/nginx.conf

user root;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 5;

upstream test {

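server 192.168.1.22:80 weight=1 max_fails=2 fail_timeout=10s; # weight: the higher the weight, the more requests this server receives; if max_fails attempts fail within fail_timeout, the server is taken out of the rotation for fail_timeout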

server 192.168.1.22:81 weight=1 max_fails=2 fail_timeout=10s;

}

server {

listen 9080; # port 9080 is used here because Harbor already occupies port 80

server_name localhost;

location / {

root html;

index index.html index.htm;

proxy_pass http://test/; # "test" is the name given to the upstream block above

proxy_set_header Host $http_host;

proxy_set_header Cookie $http_cookie;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

client_max_body_size 300m;

}

}

}

[root@harbor ~]# chmod -R 750 /data/nginx/

Configure nginx in the Portainer orchestration center

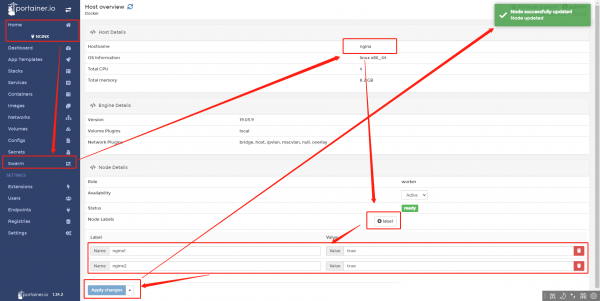

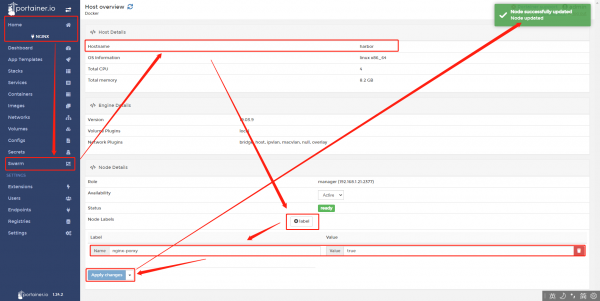

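- First, add labels to the corresponding servers in the swarm. The stacks below use these labels in their placement constraints to decide which node each service runs on.

On the harbor server, add the label nginx-proxy=true

On the nginx server, add the labels nginx1=true and nginx2=true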
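Besides the Portainer UI, the labels can be added from the command line on the swarm manager with `docker node update --label-add`. This sketch assumes the node hostnames harbor and nginx, as shown by `docker node ls` earlier. Set `DRY_RUN=1` to print the commands instead of running them. `label_nodes` is a hypothetical name.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is non-empty.
run() {
    if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: add the placement labels used by the nginx stacks.
label_nodes() {
    run docker node update --label-add nginx-proxy=true harbor &&
    run docker node update --label-add nginx1=true nginx &&
    run docker node update --label-add nginx2=true nginx
}
```

The result can be checked with `docker node inspect --format '{{ .Spec.Labels }}' nginx`.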

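- Create the nginx-proxy stack and fill in the following compose file:

version: '3.0'   # compose file format version
services:   # everything below is a service
  nginx-proxy:   # the service is named nginx-proxy
    image: 192.168.1.21/nginx/nginx@sha256:2f14a471f2c2819a3faf88b72f56a0372ff5af4cb42ec45aab00c03ca5c9989f   # image reference, pinned by the digest reported by docker push
    ports:   # published ports
      - "9080:9080"
    volumes:   # mount local paths into the container
      - /data/nginx/conf/nginx.conf:/etc/nginx/nginx.conf
      - /data/nginx/logs/:/var/log/nginx/
    networks:   # network to attach to
      - ens32
    deploy:   # swarm deployment settings
      mode: global   # one task on every node that matches the constraints
      placement:
        constraints:
          - node.labels.nginx-proxy == true   # deploy only on swarm nodes labeled nginx-proxy=true
networks:
  ens32:   # despite the name, this is a stack network (overlay in swarm mode), not the host NIC ens32

- Create the nginx-web stack and fill in the following compose file: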

version: '3.0'
services:
  nginx1:
    image: 192.168.1.21/nginx/nginx@sha256:2f14a471f2c2819a3faf88b72f56a0372ff5af4cb42ec45aab00c03ca5c9989f
    ports:
      - "80:80"
    volumes:
      - /data/nginx/conf/conf1/nginx.conf:/etc/nginx/nginx.conf
      - /data/nginx/logs/logs1/:/var/log/nginx/
      - /data/nginx/index/index1/:/usr/local/web/
    networks:
      - host
    deploy:
      mode: global
      placement:
        constraints:
          - node.labels.nginx1 == true
  nginx2:
    image: 192.168.1.21/nginx/nginx@sha256:2f14a471f2c2819a3faf88b72f56a0372ff5af4cb42ec45aab00c03ca5c9989f
    ports:
      - "81:81"
    volumes:
      - /data/nginx/conf/conf2/nginx.conf:/etc/nginx/nginx.conf
      - /data/nginx/logs/logs2/:/var/log/nginx/
      - /data/nginx/index/index2/:/usr/local/web/
    networks:
      - host
    deploy:
      mode: global
      placement:
        constraints:
          - node.labels.nginx2 == true
networks:
  host:

- After adding both stacks, click "Deploy the stack". When both stacks show the running status, open 192.168.1.21:9080 in the browser. Refreshing several times alternates between the two web pages, which means the setup works.
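To check the balancing from the command line rather than the browser, fetch the page repeatedly and count the distinct responses. Below is a minimal sketch. `tally` is a hypothetical helper name, and the URL in the usage line is the proxy address used above.

```shell
#!/bin/sh
# Hypothetical helper: count how often each distinct line appears on stdin,
# most frequent first.
tally() {
    sort | uniq -c | sort -rn
}
```

Usage: `for i in 1 2 3 4 5 6 7 8 9 10; do curl -s http://192.168.1.21:9080/; done | tally`. With two equally weighted upstream servers, round-robin should give counts close to 5 and 5 for nginx1 and nginx2.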

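Compose files for common infrastructure services

- For every compose file below, after startup you must enter the containers to set up the cluster, and the local volume paths must be given mode 777

RabbitMQ cluster

- Compose file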

version: '3.7'
services:
  rabbitmq-master:
    image: 192.168.1.21/smtjxb-zhsh/rabbitmq:3.8.5-management   # image address
    hostname: rabbitmq-master   # hostname
    restart: always   # restart automatically with Docker (ignored by docker stack deploy, which uses deploy.restart_policy)
    environment:
      RABBITMQ_DEFAULT_USER: "root"   # account
      RABBITMQ_DEFAULT_PASS: "5UsBWayE@A"   # password
      RABBITMQ_ERLANG_COOKIE: "rabbitcookie"   # Erlang cookie; must be identical on all cluster nodes
    volumes:
      - "/pasc/rabbitmq/rabbitmq/:/var/lib/rabbitmq"   # data directory
      - "/pasc/rabbitmq/logs:/var/log/rabbitmq/log"   # logs
    deploy:
      mode: global   # use global mode
      placement:
        constraints: [node.labels.rabbitmq-master == true]   # start only on nodes labeled rabbitmq-master=true
    logging:
      driver: "json-file"
      options:
        max-size: "100m"
        max-file: "3"
    networks:
      - host
  rabbitmq-slave:
    image: 192.168.1.21/smtjxb-zhsh/rabbitmq:3.8.5-management
    hostname: rabbitmq-slave
    restart: always
    environment:
      RABBITMQ_DEFAULT_USER: "root"
      RABBITMQ_DEFAULT_PASS: "5UsBWayE@A"
      RABBITMQ_ERLANG_COOKIE: "rabbitcookie"
    volumes:
      - "/pasc/rabbitmq/rabbitmq/:/var/lib/rabbitmq"
      - "/pasc/rabbitmq/logs:/var/log/rabbitmq/log"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.rabbitmq-slave == true]
    logging:
      driver: "json-file"
      options:
        max-size: "100m"
        max-file: "3"
    networks:
      - host
networks:
  host:
    external: true

- Commands to run inside the rabbitmq-slave container

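rabbitmqctl stop_app # stop the application

rabbitmqctl reset # reset the node state

rabbitmqctl join_cluster rabbit@rabbitmq-master # join the cluster

rabbitmqctl start_app # start the application

Redis: three masters and three replicas. Create three stacks, one per server; each server runs one master node and one replica node (ports 6381 and 6382).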
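The stacks below only start six standalone, cluster-enabled instances. Forming the cluster is the in-container step mentioned at the start of this section. With Redis 5, that is a single `redis-cli --cluster create ... --cluster-replicas 1` call run in any one of the containers. Below is a hypothetical helper that assembles the command. It assumes the three stacks run on 192.168.1.21, 192.168.1.22 and 192.168.1.23; adjust these to your hosts. redis-cli itself chooses which nodes become masters.

```shell
#!/bin/sh
# Hypothetical helper: print the redis-cli command that forms a cluster from
# instances on ports 6381 and 6382 of every given host.
#   $1 = requirepass/masterauth password, remaining args = host IPs
redis_cluster_create_cmd() {
    pass=$1; shift
    nodes=""
    for host in "$@"; do
        nodes="$nodes $host:6381 $host:6382"
    done
    echo "redis-cli -a $pass --cluster create$nodes --cluster-replicas 1"
}
```

Usage: `docker exec -it <redis1 container> sh -c "$(redis_cluster_create_cmd e6zdAfY2c@Gp 192.168.1.21 192.168.1.22 192.168.1.23)"`, then confirm the proposed slot layout when prompted.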

# redis1, redis2
version: "3.7"
services:
  redis1:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5   # image address
    # --port: listening port; --masterauth: cluster password (used by replicas to authenticate to their master);
    # --requirepass: Redis client password
    command:
      --port 6381
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis1_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis1 == true]   # start on the server labeled redis1=true
    networks:
      - host
  redis2:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5   # image address
    # --port: listening port; --masterauth: cluster password; --requirepass: Redis client password
    command:
      --port 6382
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis2_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis2 == true]   # start on the server labeled redis2=true
    networks:
      - host
networks:
  host:
    external: true
    name: host
volumes:
  redis1_data:
  redis2_data:

# redis3, redis4
version: "3.7"
services:
  redis3:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5
    command:
      --port 6381
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis3_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis3 == true]
    networks:
      - host
  redis4:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5
    command:
      --port 6382
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis4_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis4 == true]
    networks:
      - host
networks:
  host:
    external: true
    name: host
volumes:
  redis3_data:
  redis4_data:

# redis5, redis6
version: "3.7"
services:
  redis5:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5
    command:
      --port 6381
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis5_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis5 == true]
    networks:
      - host
  redis6:
    image: 192.168.1.21/smtjxb-zhsh/redis:5.0.5
    command:
      --port 6382
      --cluster-enabled yes
      --appendonly yes
      --protected-mode no
      --cluster-node-timeout 5000
      --masterauth e6zdAfY2c@Gp
      --requirepass e6zdAfY2c@Gp
    volumes:
      - "redis6_data:/data"
    deploy:
      mode: global
      placement:
        constraints: [node.labels.redis6 == true]
    networks:
      - host
networks:
  host:
    external: true
    name: host
volumes:
  redis5_data:
  redis6_data:

ZooKeeper on three servers
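`ZOO_SERVERS` in the files below is written over several lines, and YAML folds those lines into one space-separated string. In the official zookeeper image, each entry has the form `server.N=host:peerPort:electionPort;clientPort`, with N matching `ZOO_MY_ID`. Assuming the private dataflat/zookeeper image follows the official image here, below is a hypothetical helper that builds the value from a host list given in ID order.

```shell
#!/bin/sh
# Hypothetical helper: build a ZOO_SERVERS value; hosts are numbered 1, 2, 3, ...
# in the order given. Peer/election ports 2888/3888 match the files below.
#   $1 = client port, remaining args = host addresses
zoo_servers() {
    client_port=$1; shift
    id=1 out=""
    for host in "$@"; do
        out="$out${out:+ }server.$id=$host:2888:3888;$client_port"
        id=$((id + 1))
    done
    echo "$out"
}
```

Usage: `zoo_servers 2187 192.168.1.21 192.168.1.22 192.168.1.23` prints the three entries used in the compose file on one line.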

version: '3.7'
networks:
  host:
    external: true
services:
  zoo1:
    image: 192.168.1.21/dataflat/zookeeper:3.5.6   # image address
    restart: always
    hostname: zoo1   # hostname
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_zk1 == true]
    environment:
      ZOO_MY_ID: 1
      # ZooKeeper ensemble members (folded by YAML into one space-separated string)
      ZOO_SERVERS:
        server.1=192.168.1.21:2888:3888;2187
        server.2=192.168.1.22:2888:3888;2187
        server.3=192.168.1.23:2888:3888;2187
    extra_hosts:
      - "zoo1:192.168.1.21"
      - "zoo2:192.168.1.22"
      - "zoo3:192.168.1.23"
    volumes:   # mapped directories
      - /data/smtdata/zoo/zoo/data:/data
      - /data/smtdata/zoo/zoo/datalog:/datalog
      - /data/smtdata/zoo/zoo/config:/apache-zookeeper-3.5.6-bin/conf
    networks:
      - host
  zoo2:
    image: 192.168.1.21/dataflat/zookeeper:3.5.6
    restart: always
    hostname: zoo2
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_zk2 == true]
    environment:
      ZOO_MY_ID: 2
      # ZooKeeper ensemble members
      ZOO_SERVERS:
        server.1=192.168.1.21:2888:3888;2187
        server.2=192.168.1.22:2888:3888;2187
        server.3=192.168.1.23:2888:3888;2187
    extra_hosts:
      - "zoo1:192.168.1.21"
      - "zoo2:192.168.1.22"
      - "zoo3:192.168.1.23"
    volumes:
      - /data/smtdata/zoo/zoo/data:/data
      - /data/smtdata/zoo/zoo/datalog:/datalog
      - /data/smtdata/zoo/zoo/config:/apache-zookeeper-3.5.6-bin/conf
    networks:
      - host
  zoo3:
    image: 192.168.1.21/dataflat/zookeeper:3.5.6
    restart: always
    hostname: zoo3
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_zk3 == true]
    environment:
      ZOO_MY_ID: 3
      # ZooKeeper ensemble members
      ZOO_SERVERS:
        server.1=192.168.1.21:2888:3888;2187
        server.2=192.168.1.22:2888:3888;2187
        server.3=192.168.1.23:2888:3888;2187
    extra_hosts:
      - "zoo1:192.168.1.21"
      - "zoo2:192.168.1.22"
      - "zoo3:192.168.1.23"
    volumes:
      - /data/smtdata/zoo/zoo/data:/data
      - /data/smtdata/zoo/zoo/datalog:/datalog
      - /data/smtdata/zoo/zoo/config:/apache-zookeeper-3.5.6-bin/conf
    networks:
      - host

Kafka on three servers, deployed on the same hosts as ZooKeeper
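The size and retention settings in the Kafka files below are raw numbers. The arithmetic behind them, as a small shell sketch:

```shell
#!/bin/sh
# Worked arithmetic for the Kafka log settings used in the compose files below.
MIB=$((1024 * 1024))
GIB=$((1024 * MIB))
echo "KAFKA_LOG_SEGMENT_BYTES   = $((209715200 / MIB)) MiB"
echo "KAFKA_LOG_RETENTION_BYTES = $((5368709120 / GIB)) GiB"
echo "KAFKA_LOG_RETENTION_HOURS = $((96 / 24)) days"
```

This assumes the image maps `KAFKA_*` variables to broker properties the way the wurstmeister/kafka image does (for example `log.retention.bytes`). Note that Kafka applies `log.retention.bytes` per partition, so with `KAFKA_NUM_PARTITIONS: 3` a topic can keep roughly three times 5 GiB in total before old segments are deleted.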

version: '3.7'

networks:

host:

external: true

services:

kafka1:

image: 10.18.18.128/dataflat/kafka:2.12-2.4.0

restart: always

hostname: kafka1

depends_on:

- zoo1

- zoo2

- zoo3

extra_hosts:

- "zoo1:10.18.18.132"

- "zoo2:10.18.18.133"

- "zoo3:10.18.18.134"

- "kafka1:10.18.18.132"

- "kafka2:10.18.18.133"

- "kafka3:10.18.18.134"

environment:

kafkaID: 1

KAFKA_HOST_NAME: kafka1

KAFKA_LISTENERS: PLAINTEXT://:9092

KAFKA_ZOOKEEPER_CONNECT: 192.168.1.21:2187,192.168.1.22:2187,192.168.1.23:2187

#JMX_PORT: 9999

KAFKA_NUM_PARTITIONS: 3

KAFKA_DEFAULT_REPLICATION_FACTOR: 1

KAFKA_LOG_SEGMENT_BYTES: 209715200

KAFKA_LOG_ROLL_HOURS: 24

KAFKA_LOG_RETENTION_BYTES: 5368709120

KAFKA_LOG_RETENTION_HOURS: 96

deploy:

mode: global

placement:

constraints: [node.labels.ka1 == true]

volumes:

- /data/smtdata/kafka/kafka:/kafka

networks:

- host

  kafka2:
    image: 10.18.18.128/dataflat/kafka:2.12-2.4.0
    restart: always
    hostname: kafka2
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    extra_hosts:
      - "zoo1:10.18.18.132"
      - "zoo2:10.18.18.133"
      - "zoo3:10.18.18.134"
      - "kafka1:10.18.18.132"
      - "kafka2:10.18.18.133"
      - "kafka3:10.18.18.134"
    environment:
      kafkaID: 2   # Kafka ID, unique per broker
      KAFKA_HOST_NAME: kafka2
      KAFKA_LISTENERS: PLAINTEXT://:9092   # Kafka port
      KAFKA_ZOOKEEPER_CONNECT: 192.168.1.21:2187,192.168.1.22:2187,192.168.1.23:2187
      #JMX_PORT: 9999
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 1
      KAFKA_LOG_SEGMENT_BYTES: 209715200
      KAFKA_LOG_ROLL_HOURS: 24
      KAFKA_LOG_RETENTION_BYTES: 5368709120
      KAFKA_LOG_RETENTION_HOURS: 96
    deploy:
      mode: global
      placement:
        constraints: [node.labels.ka2 == true]
    volumes:
      - /data/smtdata/kafka/kafka:/kafka
    networks:
      - host
  kafka3:
    image: 10.18.18.128/dataflat/kafka:2.12-2.4.0
    restart: always
    hostname: kafka3
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    extra_hosts:
      - "zoo1:10.18.18.132"
      - "zoo2:10.18.18.133"
      - "zoo3:10.18.18.134"
      - "kafka1:10.18.18.132"
      - "kafka2:10.18.18.133"
      - "kafka3:10.18.18.134"
    environment:
      kafkaID: 3
      KAFKA_HOST_NAME: kafka3
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ZOOKEEPER_CONNECT: 192.168.1.21:2187,192.168.1.22:2187,192.168.1.23:2187
      #JMX_PORT: 9999
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 1
      KAFKA_LOG_SEGMENT_BYTES: 209715200
      KAFKA_LOG_ROLL_HOURS: 24
      KAFKA_LOG_RETENTION_BYTES: 5368709120
      KAFKA_LOG_RETENTION_HOURS: 96
    deploy:
      mode: global
      placement:
        constraints: [node.labels.ka3 == true]
    volumes:
      - /data/smtdata/kafka/kafka:/kafka
    networks:
      - host

es: three servers
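In the three-node Elasticsearch file that follows, the nodes differ only in node.name, network.host, the placement label and the data path. The discovery.seed_hosts and cluster.initial_master_nodes settings list all three hosts on every node. Below is a minimal POSIX sh sketch of that rule, assuming the 192.168.1.21-23 hosts. es_node_env is a hypothetical helper that prints the per-node entries for node number N.

```shell
#!/bin/sh
# Hypothetical helper: es_node_env N HOST1 HOST2 HOST3 ...
# Prints the entries that differ per node (name, bind address) plus the
# cluster-wide host lists, which are identical on every node.
es_node_env() {
  n=$1
  shift
  self=""
  i=1
  for ip in "$@"; do
    if [ "$i" -eq "$n" ]; then
      self=$ip              # the host at position N is this node's address
    fi
    i=$((i + 1))
  done
  all=$(printf '%s,' "$@")
  all=${all%,}              # drop the trailing comma
  printf '%s\n' \
    "node.name=es${n}" \
    "network.host=${self}" \
    "discovery.seed_hosts=${all}" \
    "cluster.initial_master_nodes=${all}"
}

es_node_env 2 192.168.1.21 192.168.1.22 192.168.1.23
```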

version: '3.7'
networks:
  host:
    external: true
services:
  es1:
    image: 192.168.1.21/dataflat/elasticsearch:7.7.0
    restart: always
    container_name: es1
    environment:
      - node.name=es1
      - http.port=9200
      - transport.tcp.port=9300
      - cluster.name=es_cluster
      - discovery.seed_hosts=192.168.1.21,192.168.1.22,192.168.1.23
      - cluster.initial_master_nodes=192.168.1.21,192.168.1.22,192.168.1.23
      #- bootstrap.memory_lock=true
      - network.host=192.168.1.21
      - "ES_JAVA_OPTS=-Xms8g -Xmx8g"
      - http.cors.enabled=true
      - http.cors.allow-origin=*
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_es1 == true]
    extra_hosts:
      - es1:192.168.1.21
      - es2:192.168.1.22
      - es3:192.168.1.23
    volumes:
      - /data/smtdata/esdb/es1/data:/usr/share/elasticsearch/data:rw
    networks:
      - host
  es2:
    image: 192.168.1.21/dataflat/elasticsearch:7.7.0
    restart: always
    container_name: es2
    environment:
      - node.name=es2
      - http.port=9200
      - transport.tcp.port=9300
      - cluster.name=es_cluster
      - discovery.seed_hosts=192.168.1.21,192.168.1.22,192.168.1.23
      - cluster.initial_master_nodes=192.168.1.21,192.168.1.22,192.168.1.23
      #- bootstrap.memory_lock=true
      - network.host=192.168.1.22
      - "ES_JAVA_OPTS=-Xms8g -Xmx8g"
      - http.cors.enabled=true
      - http.cors.allow-origin=*
    ulimits:
      memlock:
        soft: -1
        hard: -1
    extra_hosts:
      - es1:192.168.1.21
      - es2:192.168.1.22
      - es3:192.168.1.23
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_es2 == true]
    volumes:
      - /data/smtdata/esdb/es2/data:/usr/share/elasticsearch/data:rw
    networks:
      - host
  es3:
    image: 192.168.1.21/dataflat/elasticsearch:7.7.0
    restart: always
    container_name: es3
    environment:
      - node.name=es3
      - http.port=9200
      - transport.tcp.port=9300
      - cluster.name=es_cluster
      - discovery.seed_hosts=192.168.1.21,192.168.1.22,192.168.1.23
      - cluster.initial_master_nodes=192.168.1.21,192.168.1.22,192.168.1.23
      #- bootstrap.memory_lock=true
      - network.host=192.168.1.23
      - "ES_JAVA_OPTS=-Xms8g -Xmx8g"
      - http.cors.enabled=true
      - http.cors.allow-origin=*
    ulimits:
      memlock:
        soft: -1
        hard: -1
    extra_hosts:
      - es1:192.168.1.21
      - es2:192.168.1.22
      - es3:192.168.1.23
    deploy:
      mode: global
      placement:
        constraints: [node.labels.smtdata_es3 == true]
    volumes:
      - /data/smtdata/esdb/es3/data:/usr/share/elasticsearch/data:rw
    networks:
      - host

es: single node
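The single-node file that follows sets the heap with ES_JAVA_OPTS=-Xms8g -Xmx8g, as the three-node file above does. Elastic's heap-sizing guidance is to:

- give -Xms and -Xmx the same value,
- use no more than half of the machine's RAM,
- stay below the roughly 32 GB compressed-oops threshold.

Below is a sketch of that rule in POSIX sh. It assumes a cap of 31 GB. es_heap_opts is a hypothetical helper that takes the host RAM in GB.

```shell
#!/bin/sh
# Hypothetical helper: es_heap_opts RAM_GB
# Heap = half of RAM, capped at 31 GB (assumed margin under the ~32 GB
# compressed-oops limit) and never below 1 GB. Xms and Xmx are kept equal.
es_heap_opts() {
  heap=$(($1 / 2))
  if [ "$heap" -gt 31 ]; then
    heap=31
  fi
  if [ "$heap" -lt 1 ]; then
    heap=1
  fi
  printf '%s\n' "-Xms${heap}g -Xmx${heap}g"
}

# With 16 GB of RAM this reproduces the -Xms8g -Xmx8g used above.
echo "ES_JAVA_OPTS=$(es_heap_opts 16)"
```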

version: '3.7'
networks:
  host:
    external: true
services:
  elasticsearch:
    image: 192.168.1.1/elasticsearch:7.2.0v1
    restart: always
    container_name: es1
    environment:
      - node.name=es1
      - http.port=9200
      - transport.tcp.port=9300
      - cluster.initial_master_nodes=192.168.1.1
      #- bootstrap.memory_lock=true
      - network.host=192.168.1.1
      - "ES_JAVA_OPTS=-Xms8g -Xmx8g"
      - http.cors.enabled=true
      - http.cors.allow-origin=*
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      mode: global
      placement:
        constraints: [node.labels.es == true]
    extra_hosts:
      - es1:192.168.1.1
    volumes:
      - /es/data:/usr/share/elasticsearch/data:rw
      - /es/plugins:/usr/share/elasticsearch/plugins:rw
    networks:
      - host
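Every service in these stack files is pinned with deploy.placement.constraints, for example node.labels.es == true. The matching label must therefore exist on the intended swarm node. Otherwise no node satisfies the constraint and the service is not scheduled there. Labels are added from a manager node with docker node update --label-add.

The sketch below only prints those commands for label=node pairs. The node names are hypothetical placeholders for the names reported by docker node ls.

```shell
#!/bin/sh
# Print (without running) the label commands for "label=node" pairs.
# Run the printed commands on a swarm manager; node names here are hypothetical.
print_label_cmds() {
  for pair in "$@"; do
    label=${pair%%=*}   # part before the first "="
    node=${pair#*=}     # part after the first "="
    printf 'docker node update --label-add %s=true %s\n' "$label" "$node"
  done
}

print_label_cmds es=node-a
print_label_cmds smtdata_es1=node-21 smtdata_es2=node-22 smtdata_es3=node-23
```

After running the printed commands, docker node inspect --format '{{ .Spec.Labels }}' <node> shows the labels set on a node.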

nacos

Compose file contents
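In the Nacos file that follows, NACOS_SERVERS is the same space-separated list of ip:port pairs on all three nodes. The hostname differs per node (nacos-server01, 02, 03), and the same name can be reused for each node's log directory. Below is a POSIX sh sketch that derives both. It assumes port 8848 and the 192.168.9.136-138 hosts from the file. nacos_servers and nacos_node_name are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: nacos_servers PORT HOST1 HOST2 ...
# Prints "HOST1:PORT HOST2:PORT ..." (space separated) for NACOS_SERVERS.
nacos_servers() {
  port=$1
  shift
  out=""
  for ip in "$@"; do
    out="${out:+$out }${ip}:${port}"
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: two-digit per-node name for hostname and log directory.
nacos_node_name() {
  printf 'nacos-server%02d\n' "$1"
}

echo "NACOS_SERVERS=$(nacos_servers 8848 192.168.9.136 192.168.9.137 192.168.9.138)"
for n in 1 2 3; do
  name=$(nacos_node_name "$n")
  echo "hostname: $name  logs: /home/nacos/cluster-logs/$name"
done
```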

version: '3.7'
services:
  nacos1:
    hostname: nacos-server01
    restart: always
    image: 192.168.9.136:8080/nacos/nacos-server@sha256:90675cc79bc83ef9cd4c613a87f30cd96c174a668bc1ad099b5923d897678c20
    environment:
      - MODE=cluster
      # PREFER_HOST_MODE takes "ip" or "hostname"; the node's own address goes in NACOS_SERVER_IP
      - PREFER_HOST_MODE=ip
      - NACOS_SERVER_IP=192.168.9.136
      - NACOS_SERVERS=192.168.9.136:8848 192.168.9.137:8848 192.168.9.138:8848
      - SPRING_DATASOURCE_PLATFORM=mysql
      - MYSQL_SERVICE_HOST=101.201.223.213
      - MYSQL_SERVICE_PORT=3306
      - MYSQL_SERVICE_USER=root
      - MYSQL_SERVICE_PASSWORD=
      - MYSQL_SERVICE_DB_NAME=nacos_config
    volumes:
      - /home/nacos/cluster-logs/nacos-server01:/home/nacos/logs
      - /home/nacos/init.d:/home/nacos/init.d
    networks:
      - host
    deploy:
      mode: global
      placement:
        constraints: [node.labels.nacos1 == true]
  nacos2:
    hostname: nacos-server02
    restart: always
    image: 192.168.9.136:8080/nacos/nacos-server@sha256:90675cc79bc83ef9cd4c613a87f30cd96c174a668bc1ad099b5923d897678c20
    environment:
      - MODE=cluster
      - PREFER_HOST_MODE=ip
      - NACOS_SERVER_IP=192.168.9.137
      - NACOS_SERVERS=192.168.9.136:8848 192.168.9.137:8848 192.168.9.138:8848
      - SPRING_DATASOURCE_PLATFORM=mysql
      - MYSQL_SERVICE_HOST=101.201.223.213
      - MYSQL_SERVICE_PORT=3306
      - MYSQL_SERVICE_USER=root
      - MYSQL_SERVICE_PASSWORD=root
      - MYSQL_SERVICE_DB_NAME=nacos_config
    volumes:
      - /home/nacos/cluster-logs/nacos-server02:/home/nacos/logs
      - /home/nacos/init.d:/home/nacos/init.d
    networks:
      - host
    deploy:
      mode: global
      placement:
        constraints: [node.labels.nacos2 == true]
  nacos3:
    hostname: nacos-server03
    restart: always
    image: 192.168.9.136:8080/nacos/nacos-server@sha256:90675cc79bc83ef9cd4c613a87f30cd96c174a668bc1ad099b5923d897678c20
    environment:
      - MODE=cluster
      - PREFER_HOST_MODE=ip
      - NACOS_SERVER_IP=192.168.9.138
      - NACOS_SERVERS=192.168.9.136:8848 192.168.9.137:8848 192.168.9.138:8848
      - SPRING_DATASOURCE_PLATFORM=mysql
      - MYSQL_SERVICE_HOST=101.201.223.213
      - MYSQL_SERVICE_PORT=3306
      - MYSQL_SERVICE_USER=root
      - MYSQL_SERVICE_PASSWORD=root
      - MYSQL_SERVICE_DB_NAME=nacos_config
    volumes:
      - /home/nacos/cluster-logs/nacos-server03:/home/nacos/logs
      - /home/nacos/init.d:/home/nacos/init.d
    networks:
      - host
    deploy:
      mode: global
      placement:
        constraints: [node.labels.nacos3 == true]

networks:
  host:
    external: true