Deploying a highly available LVS + keepalived + nginx setup on CentOS 7.6

[TOC]

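Test environment

- This document describes a local test setup. keepalived is deployed on two servers, one as the master node and one as the backup node. On each server Docker runs one nginx that serves a static web page and a second nginx that acts as a load balancer. The load-balancing nginx is optional; you can instead run four static nginx instances.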

-

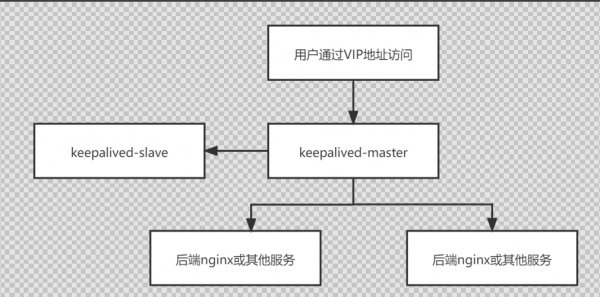

流程图

Installing nginx

Basic steps to run on both servers

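- This step only covers installing the nginx instances that serve the static pages behind the proxy. They run in Docker; installing Docker itself is left to the reader. After installing Docker and before starting any container, run setenforce 0 to switch SELinux to permissive mode for the current boot. To disable it permanently, set SELINUX=disabled in /etc/selinux/config.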
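The two SELinux steps can be scripted. The following is a minimal sketch, assuming GNU or BSD sed is available; the function name selinux_disable_in_config and its optional file argument are illustrative and not part of the original procedure.

```shell
# Sketch: persist SELINUX=disabled in the SELinux config file.
# selinux_disable_in_config is an illustrative name, not a system command.

selinux_disable_in_config() {
    cfg="${1:-/etc/selinux/config}"
    # Rewrite only the SELINUX= line; SELINUXTYPE= and comment lines are left alone.
    # A backup copy is kept next to the file with a .bak suffix.
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage on a real host (as root):
#   setenforce 0                  # permissive immediately, lost on reboot
#   selinux_disable_in_config     # takes effect from the next reboot
```

Passing a file path as the first argument lets you try the function on a copy of the config before touching /etc/selinux/config.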

- Pull the nginx image (on both servers)

# docker pull nginx without a tag pulls the latest nginx image

[root@localhost ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

eff15d958d66: Pull complete

1e5351450a59: Pull complete

2df63e6ce2be: Pull complete

9171c7ae368c: Pull complete

020f975acd28: Pull complete

266f639b35ad: Pull complete

Digest: sha256:097c3a0913d7e3a5b01b6c685a60c03632fc7a2b50bc8e35bcaa3691d788226e

Status: Downloaded newer image for nginx:latest

- Create the configuration files, logs and index page content for the two nginx instances (on both servers)

# Create the configuration, log and index page directories (run on both servers)

[root@localhost ~]# mkdir -p /data/nginx/conf/conf{1,2} # creates conf1 and conf2 under /data/nginx/conf/

[root@localhost ~]# mkdir -p /data/nginx/logs/logs{1,2}

[root@localhost ~]# mkdir -p /data/nginx/index/index{1,2}

# Create the two configuration files (run on both servers)

[root@localhost ~]# touch /data/nginx/conf/conf{1,2}/nginx.conf

- Create the local log files and the static page content

# Create the log files for both instances

[root@localhost ~]# touch /data/nginx/logs/logs{1,2}/access.log

[root@localhost ~]# touch /data/nginx/logs/logs{1,2}/error.log

# Create the index.html file (run on each server)

[root@localhost ~]# touch /data/nginx/index/index2/index.html

# Write the page content: nginx1 on the first server, nginx2 on the second

[root@localhost ~]# echo nginx1 > /data/nginx/index/index2/index.html # first server only

[root@localhost ~]# echo nginx2 > /data/nginx/index/index2/index.html # second server only

Configuration files for the two nginx instances

- The load balancer configuration is as follows (the load balancer is optional)

[root@localhost ~]# cat /data/nginx/conf/conf1/nginx.conf # load balancer configuration; extend it later as the project requires

user root;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 5;

upstream test {

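server 192.168.1.21:81 weight=1 max_fails=2 fail_timeout=10s; # weight: a higher value receives a larger share of requests; after max_fails failed attempts within fail_timeout the server is taken out of rotation for fail_timeout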

server 192.168.1.22:81 weight=1 max_fails=2 fail_timeout=10s;

}

server {

listen 80; # the load balancer listens on port 80

server_name localhost;

location / {

root html;

index index.html index.htm;

proxy_pass http://test/; # test is the name of the upstream block defined above

proxy_set_header Host $http_host;

proxy_set_header Cookie $http_cookie;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

client_max_body_size 300m;

}

}

}

- The static page configuration is as follows

[root@localhost ~]# cat /data/nginx/conf/conf2/nginx.conf # static page configuration

user root;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

keepalive_timeout 65;

server {

listen 81; # the static site listens on port 81

server_name localhost;

location / {

root /usr/local/web/; # static page directory

}

}

}

Start nginx on both servers

# Start the static page instance

[root@localhost ~]# docker run -itd --name nginx2 --network host -v /data/nginx/conf/conf2/nginx.conf:/etc/nginx/nginx.conf -v /data/nginx/logs/logs2:/var/log/nginx/ -v /data/nginx/index/index2:/usr/local/web/ nginx:latest

# Start the load balancer

[root@localhost ~]# docker run -itd --name nginx1 --network host -v /data/nginx/conf/conf1/nginx.conf:/etc/nginx/nginx.conf -v /data/nginx/logs/logs1:/var/log/nginx/ -v /data/nginx/index/index1:/usr/local/web/ nginx:latest
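The two commands above differ only in the instance number (1 for the load balancer, 2 for the static pages). As a rough sketch, they could be wrapped in one function; start_nginx and the DRY_RUN switch are illustrative names I am assuming here, not part of Docker or of the original setup.

```shell
# Sketch: build the docker run command for instance N.
# N=1 is the load balancer, N=2 the static page server.
# start_nginx and DRY_RUN are illustrative, not Docker features.

start_nginx() {
    n="$1"
    base=/data/nginx
    # Assemble the same arguments as the commands in the article.
    set -- docker run -itd --name "nginx${n}" --network host \
        -v "${base}/conf/conf${n}/nginx.conf:/etc/nginx/nginx.conf" \
        -v "${base}/logs/logs${n}:/var/log/nginx/" \
        -v "${base}/index/index${n}:/usr/local/web/" \
        nginx:latest
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"        # print the command instead of running it
    else
        "$@"
    fi
}

# Usage:
#   start_nginx 2     # static pages
#   start_nginx 1     # load balancer
```

Setting DRY_RUN=1 prints the command without starting a container, which is a convenient way to check the paths first.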

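docker run: creates and starts a container

-itd: -i keeps stdin open, -t allocates a TTY, -d runs the container in the background

--name: sets the container name

--network: selects the network mode; host shares the host's network stack, so the container listens directly on the host's ports

-v: mounts a host file or directory into the container; if the path does not exist inside the container, it is created

nginx:latest: image name and tag

Verifying the deployment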

- To tell the two servers apart, change their hostnames to nginx1 and nginx2

On the first server:

[root@localhost index2]# hostname nginx1

[root@localhost index2]# bash

On the second server:

[root@localhost index2]# hostname nginx2

[root@localhost index2]# bash

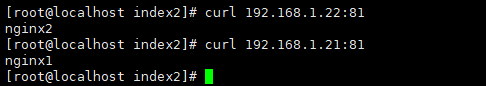

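After running the commands, close the current session and reconnect

- First, on each server, check that both static nginx instances are running and serve the expected page

Test from nginx1: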

[root@nginx1 ~]# curl 192.168.1.21:81

nginx1

[root@nginx1 ~]# curl 192.168.1.22:81

nginx2

[root@nginx1 ~]#

Test from nginx2:

[root@nginx2 ~]# curl 192.168.1.21:81

nginx1

[root@nginx2 ~]# curl 192.168.1.22:81

nginx2

[root@nginx2 ~]#

#可以看到两个静态页面的内容不一样

# 如果访问不通请关闭firewalld.service和iptables防火墙

systemctl stop firealld.service

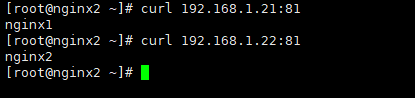

iptables -F- 在两台服务器测试负载均衡配置

nginx1测试

[root@nginx1 ~]# curl 192.168.1.21:80

nginx1

[root@nginx1 ~]# curl 192.168.1.22:80

nginx1

[root@nginx1 ~]# curl 192.168.1.21:80

nginx2

[root@nginx1 ~]# curl 192.168.1.22:80

nginx2

[root@nginx1 ~]#

Test from nginx2:

[root@nginx2 ~]# curl 192.168.1.21:80

nginx1

[root@nginx2 ~]# curl 192.168.1.21:80

nginx2

[root@nginx2 ~]# curl 192.168.1.22:80

nginx1

[root@nginx2 ~]# curl 192.168.1.22:80

nginx2

[root@nginx2 ~]#
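The alternating responses above are easier to judge over a larger number of requests. A minimal sketch that tallies the response bodies follows; tally_responses and probe are illustrative helper names, and the sketch assumes each backend returns a one-line body such as nginx1 or nginx2.

```shell
# Sketch: count how many responses came from each backend.
# tally_responses and probe are illustrative helper names.

# Read one response body per line on stdin and print "<count> <body>" per distinct body.
tally_responses() {
    sort | uniq -c | sed 's/^ *//'
}

# Send COUNT requests (default 10) to URL and tally the bodies.
probe() {
    url="$1"
    count="${2:-10}"
    i=0
    while [ "$i" -lt "$count" ]; do
        curl -s --max-time 2 "$url"
        i=$((i + 1))
    done | tally_responses
}

# Usage:
#   probe http://192.168.1.21:80 20
```

With equal weights in the upstream block, the two counts should come out roughly equal.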

The load balancer is configured and working

Keepalived

- Online installation (run on both nodes)

[root@nginx1 ~]# yum -y install ipvsadm keepalived

[root@nginx1 ~]# modprobe ip_vs

- Offline installation from RPM packages

Download the RPM bundle from: https://rely.jishuliu.cn/rpm/lvs.tar.gz

[root@nginx2 tools]# wget https://rely.jishuliu.cn/rpm/lvs.tar.gz

[root@nginx2 tools]# tar xf lvs.tar.gz

[root@nginx2 tools]# cd lvs+keepalived/

[root@nginx2 tools]# rpm -Uvh --force --nodeps *.rpm

Wait for the installation to finish

- Configure keepalived

Adjust the kernel parameters

[root@nginx1 ~]# cat >> /etc/sysctl.conf << start

> net.ipv4.conf.all.arp_ignore =1

> net.ipv4.conf.all.arp_announce = 2

> net.ipv4.conf.default.arp_ignore = 1

> net.ipv4.conf.default.arp_announce = 2

> net.ipv4.conf.lo.arp_ignore = 1

> net.ipv4.conf.lo.arp_announce = 2

> start

[root@nginx1 ~]# sysctl -p

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

[root@nginx1 ~]#
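Appending with cat >> adds the same lines again every time the step is repeated. As a hedged alternative, the sketch below sets each key exactly once; ensure_sysctl and apply_arp_settings are illustrative names, and the file argument defaults to /etc/sysctl.conf.

```shell
# Sketch: set the ARP parameters so that re-running does not duplicate lines.
# ensure_sysctl and apply_arp_settings are illustrative names.

ensure_sysctl() {
    key="$1"
    value="$2"
    file="${3:-/etc/sysctl.conf}"
    tmp=$(mktemp)
    # Keep every line whose key (text before "=", blanks removed) differs from ours.
    awk -F= -v k="$key" '{ name = $1; gsub(/[ \t]/, "", name) } name != k' "$file" > "$tmp"
    # Append the key once with the wanted value.
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    cat "$tmp" > "$file"     # overwrite in place to keep the file's owner and mode
    rm -f "$tmp"
}

apply_arp_settings() {
    target="${1:-/etc/sysctl.conf}"
    for scope in all default lo; do
        ensure_sysctl "net.ipv4.conf.${scope}.arp_ignore" 1 "$target"
        ensure_sysctl "net.ipv4.conf.${scope}.arp_announce" 2 "$target"
    done
}

# Usage (as root):
#   apply_arp_settings && sysctl -p
```

The values are the same as in the article: arp_ignore = 1 and arp_announce = 2 for all, default and lo.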

[root@nginx1 ~]# cd /etc/keepalived/ # keepalived configuration directory

[root@nginx1 keepalived]# ls

keepalived.conf

[root@nginx1 keepalived]# cp keepalived.conf bak.conf # back up the original configuration

[root@nginx1 keepalived]# vim keepalived.conf # delete the original content and add the following

- keepalived master node configuration

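# VIP configuration

global_defs {

router_id HA_TEST_R1 # identifier of this machine

}

vrrp_instance VI_1 {

state MASTER # MASTER for the primary node, BACKUP for the standby node

interface eth0 # network interface the VIP is added to

virtual_router_id 1 # VRRP virtual router ID (1-255); must be identical on master and backup

priority 100 # priority; the node with the higher value becomes master

advert_int 1 # interval between VRRP advertisements, in seconds

authentication {

auth_type PASS # authentication type: PASS is simple password authentication (recommended); AH is IPsec authentication (not recommended)

auth_pass 1111 # authentication password, at most 8 characters

}

virtual_ipaddress {

192.168.1.23 # the VIP (virtual IP address)

}

}

# Real server pool configuration

virtual_server 192.168.1.23 80 { # VIP address and port

delay_loop 6 # interval between health checks, in seconds

lb_algo rr # LVS scheduling algorithm (rr = round robin)

lb_kind DR # LVS forwarding mode (DR = direct routing)

protocol TCP # protocol type

real_server 192.168.1.21 80 { # backend nginx IP and port

weight 1 # weight, similar to the weight in the nginx upstream

TCP_CHECK {

connect_port 80 # port to connect to

connect_timeout 3 # connection timeout in seconds (the default is 5)

nb_get_retry 3 # number of retries

delay_before_retry 4 # delay before each retry, in seconds

}

}

real_server 192.168.1.22 80 { # backend nginx IP and port

weight 1 # weight, similar to the weight in the nginx upstream

TCP_CHECK {

connect_port 80 # port to connect to

connect_timeout 3 # connection timeout in seconds (the default is 5)

nb_get_retry 3 # number of retries

delay_before_retry 4 # delay before each retry, in seconds

}

}

}

- keepalived backup node configuration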

global_defs {

router_id HA_TEST_R2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 1

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.23

}

}

virtual_server 192.168.1.23 80 {

delay_loop 6

lb_algo rr

lb_kind DR

protocol TCP

real_server 192.168.1.21 81 {

weight 1

TCP_CHECK {

connect_port 81

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

real_server 192.168.1.22 81 {

weight 1

TCP_CHECK {

connect_port 81

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

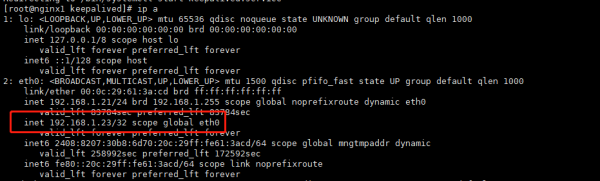

}- service keepalived start启动keepalived,启动完成后可以在MASTER节点看到eth0的网卡内多了个192.168.1.23的IP地址
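The global_defs and vrrp_instance blocks of the master and backup configurations above differ only in router_id, state and priority. One way to keep the rest in sync is to render both from a single template. This is only a sketch: render_vrrp and its argument order are illustrative, not a keepalived feature, and the virtual_server section still has to be appended separately.

```shell
# Sketch: render the global_defs and vrrp_instance blocks from one template.
# render_vrrp is an illustrative helper; arguments are router_id, state, priority.

render_vrrp() {
    router_id="$1"
    state="$2"
    priority="$3"
    cat <<EOF
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state ${state}
    interface eth0
    virtual_router_id 1
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.23
    }
}
EOF
}

# Usage:
#   render_vrrp HA_TEST_R1 MASTER 100   # master node
#   render_vrrp HA_TEST_R2 BACKUP 99    # backup node
```

Everything apart from the three arguments uses the values from the article (eth0, virtual_router_id 1, VIP 192.168.1.23).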

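- Test by accessing the VIP address and port

- The first request returns the following

- After a refresh it returns the following

- After keepalived is stopped on the master node, the VIP moves to the backup node automatically

Master node: once keepalived is stopped, the VIP is removed here and taken over by the backup node: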

[root@nginx1 keepalived]# ip a |grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.1.21/24 brd 192.168.1.255 scope global noprefixroute dynamic eth0

inet 192.168.1.23/32 scope global eth0

inet6 2408:8207:30b8:6d70:20c:29ff:fe61:3acd/64 scope global mngtmpaddr dynamic

inet6 fe80::20c:29ff:fe61:3acd/64 scope link noprefixroute

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

[root@nginx1 keepalived]# service keepalived stop

Redirecting to /bin/systemctl stop keepalived.service

[root@nginx1 keepalived]# ip a |grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.1.21/24 brd 192.168.1.255 scope global noprefixroute dynamic eth0

inet6 2408:8207:30b8:6d70:20c:29ff:fe61:3acd/64 scope global mngtmpaddr dynamic

inet6 fe80::20c:29ff:fe61:3acd/64 scope link noprefixroute

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

[root@nginx1 keepalived]#

Backup node:

[root@nginx2 keepalived]# ip a|grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.1.22/24 brd 192.168.1.255 scope global noprefixroute dynamic eth0

inet 192.168.1.23/32 scope global eth0

inet6 2408:8207:30b8:6d70:20c:29ff:fe48:d4ff/64 scope global mngtmpaddr noprefixroute dynamic

inet6 fe80::20c:29ff:fe48:d4ff/64 scope link noprefixroute

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

[root@nginx2 keepalived]#
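Instead of reading the ip a output by eye, the check for the VIP can be scripted. A minimal sketch, assuming the VIP 192.168.1.23 and interface eth0 used above; has_vip is an illustrative helper name.

```shell
# Sketch: report whether this node currently holds the VIP.
# has_vip reads "ip addr" style output on stdin, so it also works on saved output.

has_vip() {
    vip="$1"
    # Fixed-string match on "inet <vip>/" so that 192.168.1.23 does not
    # also match an address such as 192.168.1.230.
    grep -Fq "inet ${vip}/"
}

# Usage on a node:
#   ip -4 addr show dev eth0 | has_vip 192.168.1.23 && echo "VIP is on this node"
```

Running the usage line on both nodes before and after stopping keepalived should show the VIP on exactly one of them.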

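After keepalived is restarted on the master node, the VIP automatically moves back to it. The VIP remains reachable throughout the switchover.

- Load balancing test

# From the first server, curl the VIP address and port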

[root@nginx1 ~]# curl 192.168.1.23

nginx1

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx1

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]#

# Requests are distributed across both nginx nodes. Next, stop the backend static page nginx on the nginx1 server and run curl again

[root@nginx1 ~]# docker stop nginx2

nginx2

[root@nginx1 ~]# ls

anaconda-ks.cfg tools

[root@nginx1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ba24ff7a83a nginx:latest "/docker-entrypoint.…" 2 hours ago Up 2 hours nginx1

[root@nginx1 ~]# curl 192.168.1.21:81 # check whether the backend port on the first server still responds; it no longer does

curl: (7) Failed connect to 192.168.1.21:81; Connection refused

# curl the VIP again and compare with the previous result

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]#

# Requests are no longer dispatched to the first server

# Next, start the backend nginx on the first server again, then curl the VIP once more

[root@nginx1 ~]# docker start nginx2

nginx2

[root@nginx1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5ba24ff7a83a nginx:latest "/docker-entrypoint.…" 2 hours ago Up 2 hours nginx1

02f47b4cc1ee nginx:latest "/docker-entrypoint.…" 3 hours ago Up 1 second nginx2

[root@nginx1 ~]# curl 192.168.1.21:81

nginx1

# After the restart the port responds again. Next, curl the VIP once more

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx1

[root@nginx1 ~]# curl 192.168.1.23

nginx2

[root@nginx1 ~]# curl 192.168.1.23

nginx1

[root@nginx1 ~]#

# The first server is automatically added back to the rotation
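keepalived removes a real server from the IPVS table when its TCP_CHECK fails and adds it back once the check succeeds again. Besides curl, this can be observed with ipvsadm -Ln on the node that holds the VIP. The sketch below extracts the real servers from that output; active_real_servers is an illustrative helper name.

```shell
# Sketch: list the real servers LVS currently forwards to.
# active_real_servers parses "ipvsadm -Ln" output from stdin.

active_real_servers() {
    # Real-server rows start with "->" followed by ADDRESS:PORT. The header row
    # ("-> RemoteAddress:Port ...") is skipped because its second field
    # does not start with a digit.
    awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2 }'
}

# Usage on the node that holds the VIP (as root):
#   ipvsadm -Ln | active_real_servers
```

While a backend is down, its address should be missing from the list and reappear after the backend is started again.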

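Copyright: 小破站

Article title: Deploying a highly available LVS + keepalived + nginx setup on CentOS 7.6

Article link: https://www.jishuliu.cn/?post=3

All articles on this site are original. Do not use them for any commercial purpose without authorization.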
